MongoDB (from “humongous”) is an open-source, document-oriented database. Classified as a NoSQL database program, MongoDB stores JSON-like documents instead of the rows and tables of a typical relational database. It is among the most widely used NoSQL databases today.

You don’t work with rows and tables in Mongo. Instead, you work with documents and collections (sets of documents). Documents are JSON-like hashes, so any data that can be represented as a hash can easily be stored in one.

First released in 2009, MongoDB was designed as a scalable document storage engine. Mongo is a schemaless database, meaning there are no requirements or enforcement regarding the structure of the data in a document. You can, however, add enforcement and validations using modules, other libraries, or your own code if you like.
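To make “schemaless” concrete, here is a minimal plain-Ruby sketch (no MongoDB involved) that treats a collection as an array of hashes. The sample documents and the `valid_bike?` helper are hypothetical names made up for illustration. It shows two documents with different shapes living side by side, and a check in your own code enforcing a rule the database would not.

```ruby
require "json"

# Hypothetical validation living in application code, not in the database:
# a bike document is only accepted if it has a non-empty string brand.
def valid_bike?(doc)
  doc["brand"].is_a?(String) && !doc["brand"].empty?
end

# A "collection" modeled as a plain array of hash "documents".
# Nothing forces the documents to share the same keys.
bikes = [
  { "brand" => "Kona", "serial_number" => "1245-ABCD" },
  { "brand" => "Surly", "gears" => 1, "tags" => ["fixie", "steel"] }
]

# This candidate has no brand, so our own check rejects it.
candidate = { "serial_number" => "9999-ZZZZ" }
bikes << candidate if valid_bike?(candidate)

# Every document serializes to JSON, whatever its shape.
bikes.each { |doc| puts JSON.generate(doc) }
```

The point of the sketch is only that structure becomes the application’s job; a real project would more likely lean on the validations its ODM provides.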

In contrast to SQL data systems, Mongo can be scaled across a group of servers easily (horizontal scaling). Typical SQL databases usually live on a smaller number of servers and scale vertically, by moving to bigger machines. The trade-off is that it takes a while to sync data across a group of servers: Mongo can handle massive data sets quickly and efficiently, but it does so by sacrificing immediate consistency. Standard SQL services become consistent more quickly, but they can turn into a bottleneck and have performance issues at high volumes. Many people also find it more familiar on a conceptual level to interact with SQL ORMs during development. Lastly, some data sets lend themselves to a normalized structure, and MongoDB might not be a good fit for that data.
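The consistency trade-off is easier to see with a toy model. The sketch below is plain Ruby and purely illustrative: `ToyReplicaSet` is a made-up class, not how MongoDB replicates data. It only demonstrates the idea that a write accepted by one server can be invisible on another until a sync happens.

```ruby
# Toy model of a primary with one lagging replica (illustration only).
class ToyReplicaSet
  def initialize
    @primary = {}
    @secondary = {}
    @pending = [] # writes accepted by the primary, not yet replicated
  end

  # Writes always go to the primary and are queued for replication.
  def write(key, value)
    @primary[key] = value
    @pending << [key, value]
  end

  # Reads can be served by either node.
  def read(key, from: :primary)
    from == :primary ? @primary[key] : @secondary[key]
  end

  # Replication catches up some time later.
  def sync!
    @pending.each { |key, value| @secondary[key] = value }
    @pending.clear
  end
end

set = ToyReplicaSet.new
set.write("bike-1", "Kona")
p set.read("bike-1", from: :primary)   # the primary already has the write
p set.read("bike-1", from: :secondary) # the replica has not caught up yet
set.sync!
p set.read("bike-1", from: :secondary) # now both nodes agree
```

A single-server SQL database never shows the middle state, which is roughly what “more accurate more quickly” means here.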

Install MongoDB

Install MongoDB using Homebrew:

$ brew install mongodb

Start up a MongoDB service locally:

$ brew services start mongodb

If you want to stop it at any time, you can:

$ brew services stop mongodb

Start a new Rails project (I’m using 5.1.4), without using Active Record (Bye Felicia!):

$ rails new mongo-bicyles --skip-active-record

Setting up Mongoid in Rails

Add the Mongoid gem to the bottom of your Gemfile.

gem "mongoid", git: 'git@github.com:mongodb/mongoid.git'

Then run bundle install:

$ bundle install

Init your app as a repo:

$ git init...

$ git remote add origin...

Just like when you use a relational db like Postgres or MySQL, you need a configuration file. Mongoid installs a custom Rails generator for us:

$ rails generate mongoid:config

Open up the file it creates, located at config/mongoid.yml, and take a look. Check out the different options you could configure. I removed all the comments for the code block below. For this intro I’m not going to change anything in the config file.

development:
  clients:
    default:
      database: mongo_bicyles_development
      hosts:
        - localhost:27017
      options:
  options:
test:
  clients:
    default:
      database: mongo_bicyles_test
      hosts:
        - localhost:27017
      options:
        read:
          mode: :primary
        max_pool_size: 1

At this point, I like to run $ rails s -p 5678 and make sure the server kicks over without any issues. If it does, check in what we’ve got so far.

$ git add .

$ git commit -am "first commit for Rails using MongoDB project"

Using Mongoid in Rails

Since this is just a demo, we can use Rails’ scaffolding generators to get us started quickly. (p.s. I never do this in a production project)

$ rails generate scaffold bicycle brand serial_number manufacturer country

Let’s see what was created with the scaffold:

$ git status

Specifically, let’s check out the model that was generated in app/models/bicycle.rb:

class Bicycle
  include Mongoid::Document
  field :brand, type: String
  field :serial_number, type: String
  field :manufacturer, type: String
  field :country, type: String
end

That doesn’t look like a typical Active Record model! MongoDB doesn’t have a database schema, so you will notice that there are no database migrations. If we want a new field, we could just add one in the model and add it to our views. Migrations can still be used, but they’re for data migration or transformation, not changing the underlying structure of the database.

Let’s explore what the rest of this looks like via the scaffold we just created.

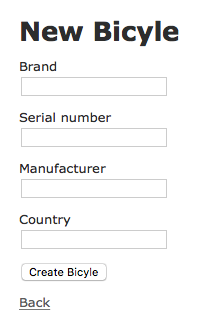

$ rails s -p 5678

Visit the scaffolded route http://localhost:5678/bicycles. There we see the good ole’ Rails CRUD forms.

Understanding Documents in MongoDB

Let’s add a bicycle, and look at the URL of the show page. The URL contains a param with a weird set of numbers and letters: http://localhost:5678/bicycles/5a387386f6ec12c032043f01. We’re used to seeing auto-incrementing integer IDs there, the ones created for us by the database. Usually each item is added as a row to a table, which increments the row counter by 1. Each row has a locally unique sequential identifier.

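MongoDB uses a gnarly-looking 24-character hexadecimal string as an object id, like “5a387386f6ec12c032043f01”. It is not a hash of the data: the object id is always 12 bytes and is composed of a 4-byte timestamp, a client machine id, a client process id, and a 3-byte incrementing counter. (Newer MongoDB versions replace the machine and process ids with a 5-byte random value.)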
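Because the layout is fixed, you can pull an object id apart by hand. The following is a minimal plain-Ruby sketch; `decode_object_id` is a hypothetical helper written for this post, not part of Mongoid. It only interprets the leading timestamp and the trailing counter, and leaves the five bytes in between as opaque hex, since their meaning depends on the MongoDB version.

```ruby
# Split a 24-character hex ObjectId string into its raw parts.
def decode_object_id(hex)
  raise ArgumentError, "expected 24 hex characters" unless hex.match?(/\A\h{24}\z/)

  {
    created_at: Time.at(hex[0, 8].to_i(16)).utc, # first 4 bytes: seconds since the Unix epoch
    middle: hex[8, 10],                          # next 5 bytes: machine/process id or random value
    counter: hex[18, 6].to_i(16)                 # last 3 bytes: incrementing counter
  }
end

parts = decode_object_id("5a387386f6ec12c032043f01")
puts parts[:created_at] # creation time of the document, in UTC
puts parts[:middle]
puts parts[:counter]
```

One practical consequence: since the timestamp comes first, sorting by _id roughly sorts documents by creation time.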

Let’s peer under the hood of MongoDB on the CLI, and check out a document. We can do this by first looking in our config/mongoid.yml for development.clients.default.database. This application uses the database mongo_bicyles_development:

$ mongo mongo_bicyles_development

Even though there is no Structured Query Language, you still have access to a special command language.

Let’s take a look at the collections in the database:

> show collections

bicycles

You can find a record using this language. The format is db.[collection].find(). This command returns all documents in a given collection. Since we only added one, that’s all we get.

> db.bicycles.find()

{ "_id" : ObjectId("5a387386f6ec12c032043f01"), "brand" : "Kona", "serial_number" : "1245-ABCD", "manufacturer" : "We Made IT", "country" : "Tawain" }

Mongo stores data as BSON (a binary form of JSON), and uses JavaScript here in the command line tool.

> typeof db

object

> typeof db.bicycles

object

> typeof db.bicycles.find

function

Handy hint: if we want to see what the code for a Mongo command looks like, we can type the function’s name without parentheses.

> db.bicycles.find

function (query, fields, limit, skip, batchSize, options) {
    var cursor = new DBQuery(this._mongo,
                             this._db,
                             this,
                             this._fullName,
                             this._massageObject(query),
                             fields,
                             limit,
                             skip,
                             batchSize,
                             options || this.getQueryOptions());

    {
        const session = this.getDB().getSession();

        const readPreference = session._serverSession.client.getReadPreference(session);
        if (readPreference !== null) {
            cursor.readPref(readPreference.mode, readPreference.tags);
        }

        const readConcern = session._serverSession.client.getReadConcern(session);
        if (readConcern !== null) {
            cursor.readConcern(readConcern.level);
        }
    }

    return cursor;
}

When might using MongoDB be a good idea?

Do you have a very rapidly scaling system with high volumes of traffic?

MongoDB is designed to be scalable and flexible, while remaining familiar enough that application engineers can easily pick it up. Working with Mongo is mostly the same as working with a traditional relational database management system. You can’t, however, do SQL-style server-side JOINs between two sets of data (the aggregation pipeline’s $lookup stage, added in MongoDB 3.2, is the closest equivalent).

Because it lacks SQL-style JOINs, the relationships between different objects (Users have Posts, Posts have Comments, Comments have Users) can be tricky, as you need to set up those relationships in the model. If you have a high volume but a low level of complexity in your data, or your application’s access pattern is a lot of reads and few writes, then this could be a good paradigm for you to embrace. If you have a data team and a group of engineers who are fluent in managing big data, then you should also probably embrace it (or another NoSQL alternative).
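Here is a rough idea of what “setting up the relationship yourself” means. The sketch is plain Ruby over arrays of hashes, with made-up sample data and a hypothetical `users_with_posts` helper; it is not Mongoid’s API. It stitches users and posts together in application code, which is the work a SQL JOIN would otherwise do inside the database.

```ruby
# Application-side "join": group posts by their user_id reference once,
# then attach each user's posts to a copy of the user document.
def users_with_posts(users, posts)
  posts_by_user = posts.group_by { |post| post["user_id"] }
  users.map do |user|
    user.merge("posts" => posts_by_user.fetch(user["_id"], []))
  end
end

# Two "collections", linked by reference (user_id points at a user's _id).
users = [
  { "_id" => 1, "name" => "Ada" },
  { "_id" => 2, "name" => "Grace" }
]
posts = [
  { "_id" => 10, "user_id" => 1, "title" => "Fixing a flat" },
  { "_id" => 11, "user_id" => 1, "title" => "Choosing a saddle" },
  { "_id" => 12, "user_id" => 2, "title" => "Winter riding" }
]

users_with_posts(users, posts).each do |user|
  titles = user["posts"].map { |post| post["title"] }.join(", ")
  puts "#{user["name"]}: #{titles}"
end
```

The merged result is also what an embedded, de-normalized document looks like: each user carries its own posts, so a read needs no join at all, at the cost of keeping the copies up to date on writes. That is why this style suits read-heavy workloads.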

When might it be a bad idea?

MongoDB encourages de-normalization of schemas, which might be too much for other engineers or DBAs to swallow. Some people find the hard constraints of a relational database reassuring and more productive. Having worked on projects with rapidly evolving data, I can see the need for a more structured approach.

Although sometimes restrictive, a database schema and the constraints it places on our data can be reassuring and useful. While MongoDB offers a huge increase in scalability and speed of record retrieval, its weak support for relating documents from two different collections (the key strength of an RDBMS) often makes it a poor fit for a CRUD-focused web application.

Because MongoDB is focused on large datasets, it works best in large clusters, which can be a pain to architect and manage.

It can also lead to a lot of headaches if not applied properly (e.g. you are trying to enforce principles used in an RDBMS), or if used too early (i.e. for a system that doesn’t have super high traffic, but might at some point in the distant future). There are threads all over describing bad experiences like this.

Some helpful resources for next steps

Mongoid Gem (the official MongoDB pages)

Why I think Mongo is to Databases what Rails was to Frameworks

Old-school Ryan Bates Railscast on Mongoid

The book ‘The Imposter’s Handbook’ by Rob Conery has also been a great help in understanding databases and the theory and applied knowledge of big data.